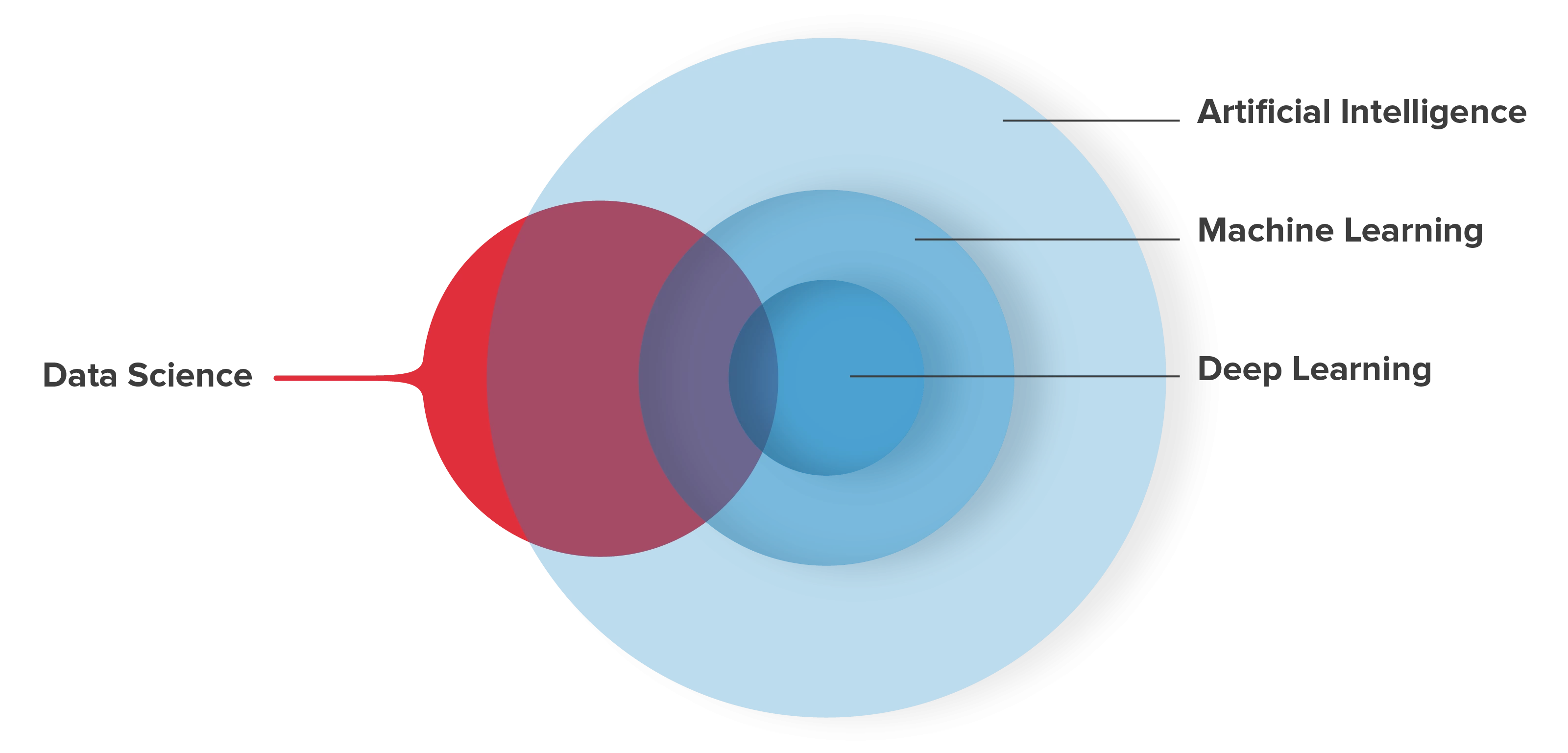

Machine Learning (ML) is used in Artificial Intelligence (AI) as well as in Analytics and Data Science.

There are three main types of machine learning: Supervised Learning, Unsupervised Learning and Reinforcement Learning.

Supervised Learning: With Supervised Learning, you have input data, called features, and the expected result, called the label. It lets you make predictions using a model* built from historical data and a chosen algorithm.

Supervised learning attempts to answer two questions:

- Classification: “which class?”; and

- Regression: “how much?” or “how many?”.
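To make the two questions concrete, here is a minimal sketch using only the Python standard library. The data (hours studied, pass/fail, exam score) is made up, and the two algorithms (1-nearest-neighbour for classification, least squares for regression) are illustrative choices among many, not the only way to do either task.

```python
# Toy historical data: one feature (hours studied) and two kinds of label.
hours = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
passed = ["no", "no", "no", "yes", "yes", "yes"]   # class label
score = [52.0, 54.0, 56.0, 58.0, 60.0, 62.0]       # numeric label


def predict_class(x, features, labels):
    """1-nearest-neighbour: return the label of the closest known example."""
    nearest = min(range(len(features)), key=lambda i: abs(features[i] - x))
    return labels[nearest]


def fit_line(features, targets):
    """Least squares for a single feature: returns (slope, intercept)."""
    n = len(features)
    mean_x = sum(features) / n
    mean_y = sum(targets) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(features, targets))
    sxx = sum((x - mean_x) ** 2 for x in features)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


slope, intercept = fit_line(hours, score)
print(predict_class(4.6, hours, passed))   # classification answers with a class: yes
print(slope * 7.0 + intercept)             # regression answers with a number: 64.0
```

In both cases the historical features and labels are what make the prediction possible; only the type of the label (a class or a number) changes.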

Unsupervised Learning: With Unsupervised Learning, you still have features, but no label, since we are not trying to predict anything.

Unsupervised Learning involves mining historical data to see what can be learned from them, then validating the conclusions reached with subject-matter experts.

This kind of Machine Learning is used to discover data structures and patterns. It can also be used for Feature Engineering when preparing data for Supervised Learning (more on this later).
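As an illustration of discovering structure without a label, the sketch below runs k-means clustering on a single made-up column. k-means is one common unsupervised algorithm chosen here as an example; the customer-spend data, the function name and the starting centers are all assumptions.

```python
def k_means_1d(values, centers, iterations=10):
    """Plain k-means on 1-D data, starting from the given centers."""
    centers = list(centers)
    groups = [[] for _ in centers]
    for _ in range(iterations):
        # Assignment step: attach each value to its nearest center.
        groups = [[] for _ in centers]
        for v in values:
            nearest = min(range(len(centers)), key=lambda i: abs(v - centers[i]))
            groups[nearest].append(v)
        # Update step: move each center to the mean of its group.
        centers = [sum(g) / len(g) if g else c for g, c in zip(groups, centers)]
    return centers, groups


# Features only, no label: monthly spend of eight hypothetical customers.
spend = [12.0, 15.0, 11.0, 14.0, 95.0, 102.0, 99.0, 104.0]
centers, groups = k_means_1d(spend, centers=[0.0, 50.0])
print(centers)   # [13.0, 100.0]
print(groups)    # two groups: low spenders and high spenders
```

The algorithm only reports that two groups exist. Deciding what they mean (for example, "occasional" versus "regular" customers) is the part to validate with subject-matter experts.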

Reinforcement Learning: With Reinforcement Learning, you start with an agent (an algorithm) that has to choose from a list of actions. Depending on the chosen action, it receives feedback from the environment (which may be a human in certain situations, or another algorithm): either a reward for a good choice or a penalty for a bad one. The agent learns which strategy (i.e. which choice of actions) maximizes the cumulative reward.

Reinforcement Learning is often used in robotics, game theory and self-driving vehicles.
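The agent, action, reward and penalty loop can be sketched in a few lines. This is a deliberately tiny example under stated assumptions: the three actions, the `environment` function and its +1/-1 feedback are hypothetical, and the epsilon-greedy strategy is just one simple way to balance trying new actions against repeating the best one found so far.

```python
import random

ACTIONS = ["left", "stay", "right"]


def environment(action):
    """Hypothetical environment: reward +1 for "right", penalty -1 otherwise."""
    return 1.0 if action == "right" else -1.0


def train(steps=200, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    value = {a: 0.0 for a in ACTIONS}   # running average reward per action
    count = {a: 0 for a in ACTIONS}
    total = 0.0                         # cumulative reward
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)          # explore: try a random action
        else:
            action = max(ACTIONS, key=value.get)  # exploit: best estimate so far
        reward = environment(action)
        count[action] += 1
        # Incremental mean: nudge the estimate toward the observed reward.
        value[action] += (reward - value[action]) / count[action]
        total += reward
    return value, total


value, total = train()
best_action = max(value, key=value.get)
print(best_action, total)
```

The agent is never told which action is correct. It only sees rewards and penalties, and its value estimates end up favouring the action that maximizes the cumulative reward.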

The main steps in a Machine Learning project are:

Data Preparation

- Data collection: first, gather the data you’ll need for Machine Learning. Make sure you consolidate them so that they all end up in a single table (a flat table).

- Data Wrangling: this involves preparing the data so that Machine Learning algorithms can use them. It covers the following steps:

  - Data Cleansing: find all the “Null” values, missing values and duplicate data. Replace “Null” and missing values with other data (or delete them) and make sure you have no duplicates.

  - Data Decomposition: text columns sometimes contain more than one piece of information; split them into as many dedicated columns as necessary. If some columns represent categories, convert them into dedicated category columns.

  - Data Aggregation: group data together when appropriate.

  - Data Scaling: bring numeric features onto one common scale if they are not already. This is required when feature ranges vary widely. Data Scaling does not apply to label or categorical columns.

  - Data Shaping and Transformation: convert categorical values to numeric ones.

- Data Enrichment: Sometimes, you’ll need to augment existing data with external data to give the algorithm more information to work with, improving the model (for example, economic or meteorological data).
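The cleansing, decomposition, scaling and shaping steps above can be sketched on a tiny made-up table. The column names and values are hypothetical, and the specific choices (filling a missing value with the mean, min-max scaling, one-hot encoding) are common options picked for illustration, not the only valid ones. In practice a library such as pandas would usually do this work; the standard library is used here to keep the sketch self-contained.

```python
raw_rows = [
    {"name": "Ann", "location": "Paris, France", "age": 30.0, "plan": "basic"},
    {"name": "Bob", "location": "Lyon, France", "age": None, "plan": "premium"},
    {"name": "Ann", "location": "Paris, France", "age": 30.0, "plan": "basic"},  # duplicate
    {"name": "Cid", "location": "Rome, Italy", "age": 50.0, "plan": "basic"},
]

# Data Cleansing: drop exact duplicates, keeping the first occurrence.
seen, rows = set(), []
for row in raw_rows:
    key = tuple(sorted(row.items()))
    if key not in seen:
        seen.add(key)
        rows.append(dict(row))

# Data Cleansing: replace missing ages with the mean of the known ages.
known = [r["age"] for r in rows if r["age"] is not None]
mean_age = sum(known) / len(known)
for r in rows:
    if r["age"] is None:
        r["age"] = mean_age

# Data Decomposition: split "location" into dedicated "city" and "country" columns.
for r in rows:
    r["city"], r["country"] = [part.strip() for part in r.pop("location").split(",")]

# Data Scaling: min-max scale the numeric feature to the range [0, 1].
lo, hi = min(r["age"] for r in rows), max(r["age"] for r in rows)
for r in rows:
    r["age_scaled"] = (r["age"] - lo) / (hi - lo)

# Data Shaping: one-hot encode the categorical "plan" column as 0/1 columns.
for plan in sorted({r["plan"] for r in rows}):
    for r in rows:
        r["plan_" + plan] = 1 if r["plan"] == plan else 0

for r in rows:
    print(r)
```

Note that only the numeric feature is scaled; the categorical column is encoded instead, in line with the rule that scaling does not apply to categorical columns.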

Feature Engineering

- Visualise your data as a whole to see if there are any links between columns. By using charts, you can see the features side-by-side and detect any links among features, and between features and labels.

- Links between features allow us to see if any given feature is directly dependent on another. If so, you may not need both features.

- Links between one feature and the label allow us to see if any feature will have a great impact on the result.

- Sometimes, in a classification problem, you’ll need to generate additional features from existing ones (for example, when the chosen algorithm is unable to differentiate the classes correctly).

- You can end up with a huge number of columns, in which case you need to choose which ones to use as features. If you have thousands of columns (i.e. potential features), you’ll need to apply Dimensionality Reduction. Several techniques are available, including Principal Component Analysis (PCA). PCA is an unsupervised learning algorithm that combines existing columns into new columns called principal components, which can then be used by the classification algorithm.
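Charts are the usual way to spot links, but a number can back up what you see. The sketch below, on made-up columns, measures links with the Pearson correlation coefficient and then uses a bare-bones PCA (power iteration on the covariance matrix, which finds only the first principal component) to merge two redundant features into one. The function names and data are assumptions; a real project would more likely rely on a numerical library.

```python
import math


def pearson(xs, ys):
    """Pearson correlation between two columns of equal length."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


def first_principal_component(columns, iterations=50):
    """Unit vector of greatest variance, via power iteration on the covariance matrix."""
    n = len(columns[0])
    means = [sum(col) / n for col in columns]
    centered = [[v - m for v in col] for col, m in zip(columns, means)]
    cov = [[sum(a * b for a, b in zip(ci, cj)) / (n - 1) for cj in centered]
           for ci in centered]
    vec = [1.0] * len(columns)
    for _ in range(iterations):
        vec = [sum(c * v for c, v in zip(row, vec)) for row in cov]
        norm = math.sqrt(sum(v * v for v in vec))
        vec = [v / norm for v in vec]
    return vec, centered


# Made-up columns: the same height in two units, plus a numeric label.
height_cm = [150.0, 160.0, 170.0, 180.0, 190.0]
height_in = [h / 2.54 for h in height_cm]
weight_kg = [52.0, 58.0, 69.0, 77.0, 90.0]

redundancy = pearson(height_cm, height_in)   # feature vs feature
relevance = pearson(height_cm, weight_kg)    # feature vs label

# Replace the two height columns with a single principal component.
component, centered = first_principal_component([height_cm, height_in])
height_pc = [sum(w * col[i] for w, col in zip(component, centered))
             for i in range(len(height_cm))]
print(redundancy, relevance, height_pc)
```

A correlation close to 1 between two features suggests that one of them adds little, while a strong correlation between a feature and the label suggests the feature will matter for the prediction.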

Algorithm Choice

At this stage, you can start training your algorithms, but first:

- Split your dataset into three parts: Training, Testing and Validation.

  - Training data will be used to train your chosen algorithm(s);

  - Testing data will be used to check the performance of each resulting model;

  - Validation data will be set aside until the very end of the process and, if it must be used before that, looked at as rarely as possible, in order to avoid introducing any bias into the result. (Many texts and libraries use these two names the other way round, calling the set used during tuning “validation” and the final held-out set “test”.)

- Choose the relevant algorithm(s).

- Try the algorithms with different combinations of parameters and compare the performance of their results.

- Use a hyper-parameter search procedure (e.g. GridSearchCV from scikit-learn in Python) to try many combinations automatically and find the one that yields the best result, rather than testing combinations by hand.

- As soon as you’re reasonably happy with a model, save it even if it’s not perfect, since you may never again find the combination that produced it.

- Continue testing after every new model. If the results are not satisfactory, you can start over:

  - At the hyper-parameter stage, if you’re lucky.

  - Otherwise, you may need to go back to the choice of features to include or, even worse, review the state of your data (check for problems with normalization, regularization, scaling, outliers…).
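The steps above (split, search over hyper-parameters, save, final check) can be sketched end to end with the standard library. Everything specific here is an assumption made for illustration: the synthetic dataset, the 60/20/20 proportions, the k-nearest-neighbours classifier and the grid of `k` values. The set names follow this article's usage, with testing data used during the search and validation data kept for the end.

```python
import itertools
import pickle
import random
from collections import Counter

# Hypothetical dataset: one numeric feature, label "high" when the feature is >= 30.
data = [(float(x), "high" if x >= 30 else "low") for x in range(60)]

# Split: 60% training, 20% testing, 20% validation (proportions are an assumption).
rng = random.Random(42)
rng.shuffle(data)
train, test, validation = data[:36], data[36:48], data[48:]


def predict(model, x):
    """k-nearest-neighbours vote; the model is the training data plus k."""
    neighbours = sorted(model["train"], key=lambda row: abs(row[0] - x))[: model["k"]]
    return Counter(label for _, label in neighbours).most_common(1)[0][0]


def accuracy(model, rows):
    """Share of rows whose predicted label matches the true label."""
    return sum(predict(model, x) == label for x, label in rows) / len(rows)


# Grid search: try every combination of the listed hyper-parameter values.
grid = {"k": [1, 3, 5, 7]}
best_model, best_score = None, -1.0
for combination in itertools.product(*grid.values()):
    params = dict(zip(grid, combination))
    candidate = {"train": train, **params}
    score = accuracy(candidate, test)
    if score > best_score:
        best_model, best_score = candidate, score

# Save the best model found so far (to bytes here; write them to a file to keep them).
saved = pickle.dumps(best_model)

# Final check on the validation data, touched only once at the very end.
restored = pickle.loads(saved)
final_score = accuracy(restored, validation)
print(best_model["k"], best_score, final_score)
```

Adding a second hyper-parameter only means adding another key to `grid`; the loop then covers every combination, which is exactly the bookkeeping that is easy to get wrong by hand.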

Remember that after all this, Machine Learning may not yield a better result than the one you obtained through traditional processes. But, at worst, it will usually provide interesting information thanks to the data analysis process it makes you go through.

(*) Model: the result obtained by running an algorithm, with a given combination of parameters, on the data. You can think of the model as a mathematical function y = f(x), where x is the features, y is the label and f is the model.
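As one concrete instance (an illustration only, since a model can take many other forms), simple linear regression with a single feature gives a model with two learned numbers, $w$ and $b$:

```latex
\hat{y} = f(x) = w\,x + b,
\qquad
(w, b) = \arg\min_{w,\,b} \sum_{i=1}^{n} \bigl( y_i - (w\,x_i + b) \bigr)^2
```

Here the algorithm is least squares, the pairs $(x_i, y_i)$ are the historical features and labels, and the values of $w$ and $b$ it finds are what you save as the model.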